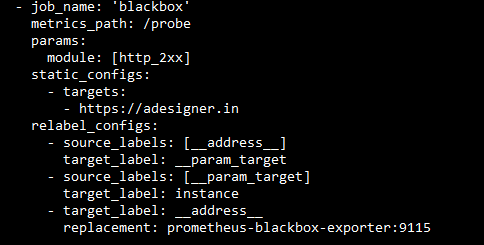

1. apm-server.yml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: apm-deployment
  labels:
    app: apm-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: apm-deployment
  template:
    metadata:
      labels:
        app: apm-deployment
        env: prod
    spec:
      containers:
        - name: apm-deployment
          image: "elastic/apm-server:7.9.0"
          imagePullPolicy: IfNotPresent
          volumeMounts:
            # Overlay only apm-server.yml from the ConfigMap onto the image's default config file
            - name: apm-server-config
              mountPath: /usr/share/apm-server/apm-server.yml
              subPath: apm-server.yml
          ports:
            - containerPort: 8200
      volumes:
        - name: apm-server-config
          configMap:
            name: apm-server-config
---
kind: Service
apiVersion: v1
metadata:
  name: apm-deployment-svc
  labels:
    app: apm-deployment-svc
spec:
  # Expose APM Server on port 30010 of every node so browsers can reach it
  type: NodePort
  ports:
    - name: http
      port: 8200
      protocol: TCP
      nodePort: 30010
  selector:
    app: apm-deployment
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: apm-server-config
  labels:
    app: apm-server
data:
  apm-server.yml: |-
    apm-server:
      host: "0.0.0.0:8200"
      # Accept data from the browser (RUM) JavaScript agent
      rum:
        enabled: true
    output.elasticsearch:
      # Replace with your Elasticsearch host
      hosts: ["elasticsearch-service:9200"]
```

Note:

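1. Replace `elasticsearch-service:9200` with the host and port of your own Elasticsearch instance.

2. Of the optional features, only RUM (Real User Monitoring), used by the browser JavaScript agent, is enabled.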
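The Service finds the APM Server pod through its `selector`, and the Deployment finds its pods through `spec.selector.matchLabels`. With equality-based selectors, a pod is selected when every key/value pair in the selector appears in the pod's labels, so the extra `env: prod` label does not prevent a match. The sketch below illustrates that rule in JavaScript using the labels from the manifests above; `selectorMatches` is a hypothetical helper written for illustration, not part of any Kubernetes client library.

```javascript
// Hypothetical helper illustrating equality-based label selection:
// a pod matches when every selector key/value pair is present in its labels.
// (Assumes a non-empty selector; a Service with no selector behaves differently.)
function selectorMatches(selector, podLabels) {
  return Object.entries(selector).every(
    ([key, value]) => podLabels[key] === value
  );
}

// Labels from the Deployment's pod template.
const podLabels = { app: "apm-deployment", env: "prod" };

// Selectors from the Deployment and the Service above.
const deploymentSelector = { app: "apm-deployment" };
const serviceSelector = { app: "apm-deployment" };

console.log(selectorMatches(deploymentSelector, podLabels)); // true
console.log(selectorMatches(serviceSelector, podLabels)); // true

// The Service's own label (app: apm-deployment-svc) would NOT select these pods.
console.log(selectorMatches({ app: "apm-deployment-svc" }, podLabels)); // false
```

This is why the Service's `selector` must use `app: apm-deployment` (the pod label) rather than the Service's own `app: apm-deployment-svc` label.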

2. Add the code below to every HTML page you want to monitor, e.g. index.html

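```html
<!DOCTYPE html>
<html>
<head>
  <!-- RUM agent bundle, loaded from the same directory as this page -->
  <script src="elastic-apm-rum.umd.min.js" crossorigin></script>
  <script>
    // Start the agent: serviceName labels the data in Kibana,
    // serverUrl points at the APM Server NodePort Service
    elasticApm.init({
      serviceName: 'test-app1',
      serverUrl: 'http://192.168.0.183:30010',
    })
  </script>
</head>
<body>
  This is test-app1
</body>
</html>
```

Note: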

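1. Replace `serverUrl` with `http://<node-IP>:30010`, where 30010 is the `nodePort` of the Service defined above.

2. Download `elastic-apm-rum.umd.min.js` from GitHub (the `elastic/apm-agent-rum-js` repository) and place it next to index.html, since the `src` attribute above is a relative path.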
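The `serverUrl` is simply a node address plus the Service's `nodePort`. A NodePort Service listens on that port on every node, so any node IP works, and Kubernetes only accepts `nodePort` values inside the cluster's service node port range, which defaults to 30000-32767. The sketch below builds the URL and rejects ports outside that default range; `apmServerUrl` is a hypothetical helper, and the range constants assume the cluster uses the default setting.

```javascript
// Default Kubernetes NodePort range (configurable on the API server via
// --service-node-port-range); adjust these if your cluster uses another range.
const NODE_PORT_MIN = 30000;
const NODE_PORT_MAX = 32767;

// Hypothetical helper: build the RUM agent's serverUrl from a node IP
// and the Service's nodePort.
function apmServerUrl(nodeIp, nodePort, scheme = "http") {
  if (
    !Number.isInteger(nodePort) ||
    nodePort < NODE_PORT_MIN ||
    nodePort > NODE_PORT_MAX
  ) {
    throw new RangeError(
      `nodePort ${nodePort} is outside ${NODE_PORT_MIN}-${NODE_PORT_MAX}`
    );
  }
  return `${scheme}://${nodeIp}:${nodePort}`;
}

// Values from this example: node IP 192.168.0.183, nodePort 30010.
const serverUrl = apmServerUrl("192.168.0.183", 30010);
console.log(serverUrl); // http://192.168.0.183:30010
```

The resulting string is what goes into `elasticApm.init({ serviceName: 'test-app1', serverUrl })`. Passing the container port 8200 instead would throw, which catches the common mistake of using the in-cluster port from a browser.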

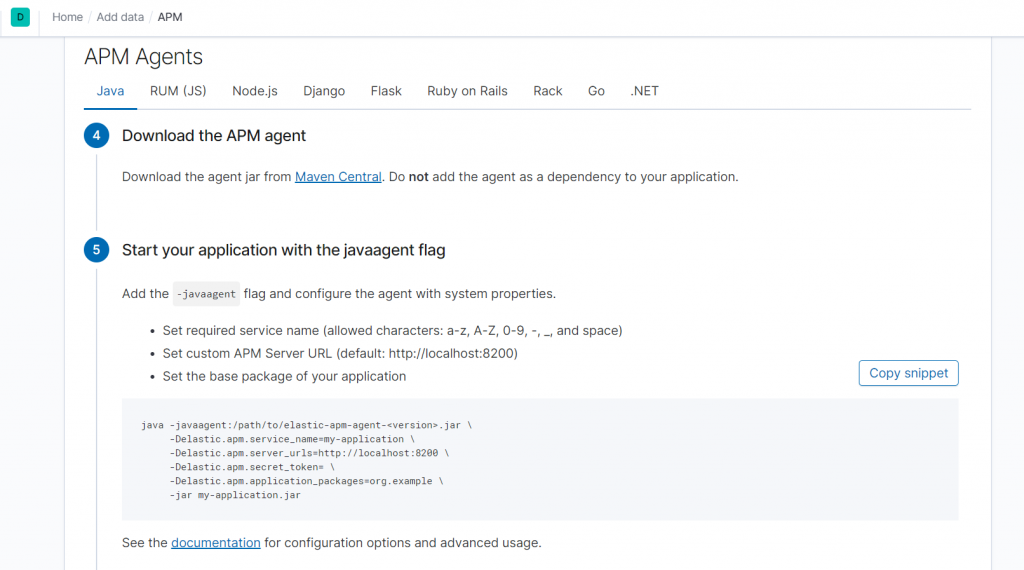

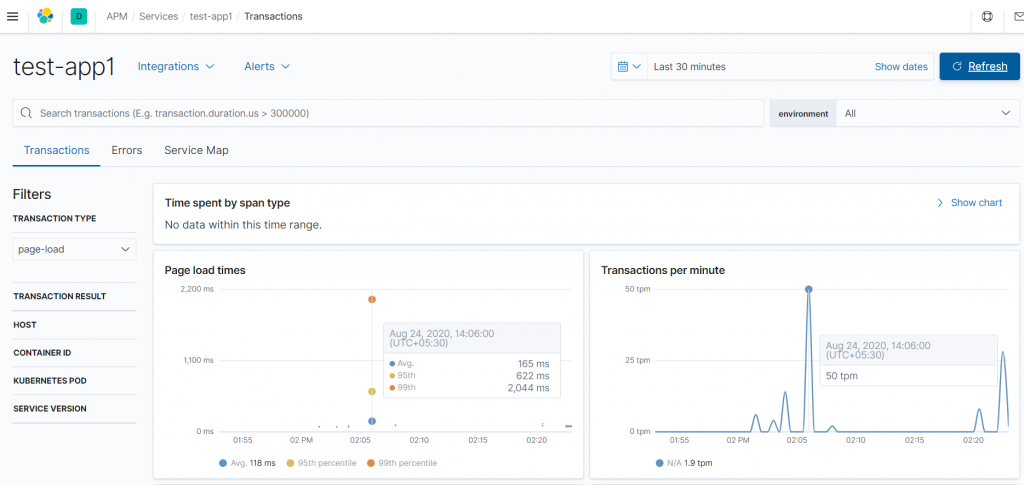

3. Kibana dashboard for APM

We can also monitor the performance of applications written in other languages by using Elastic's APM agents for those languages.