version: '2.2'
services:
  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.12.0
    container_name: elasticsearch
    environment:
      discovery.type: "single-node"
    volumes:
      - /root/elasticsearch:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
  kibana:
    image: docker.elastic.co/kibana/kibana:7.12.0
    container_name: kibana
    environment:
      ELASTICSEARCH_HOSTS: "http://elasticsearch:9200"
    ports:
      - 5601:5601
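
After the stack is started (for example with docker-compose up -d), Elasticsearch can take some time before it answers on port 9200. The following is a minimal Python sketch of a readiness check. The function name wait_until_up, the retry settings and the address http://localhost:9200 are assumptions for illustration and are not part of the original setup.

```python
import time
import urllib.request


def wait_until_up(url, attempts=30, delay=2.0, opener=urllib.request.urlopen):
    """Poll `url` until it answers with HTTP 200 or the attempts run out.

    `opener` is injectable so the retry logic can be exercised without a
    running container. Returns True on success, False otherwise.
    """
    for attempt in range(attempts):
        try:
            with opener(url) as response:
                if response.status == 200:
                    return True
        except OSError:
            # URLError, HTTPError and connection errors are all OSError
            # subclasses: the service is not reachable (or not ready) yet.
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False


# Assumed usage, with port 9200 published to the host as in the compose file:
#   wait_until_up("http://localhost:9200")
```

The same check can be pointed at Kibana on port 5601 once Elasticsearch responds.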


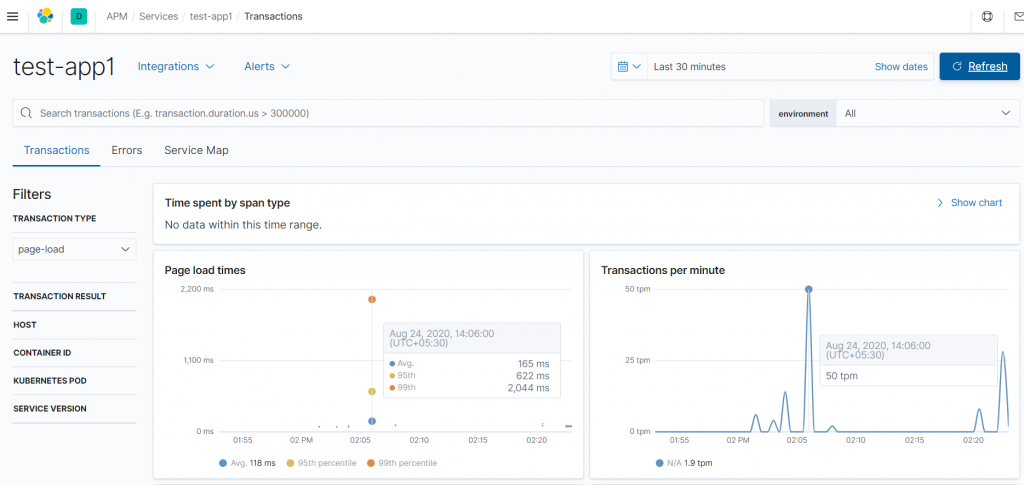

Elastic APM monitoring for a JavaScript app on Kubernetes

1. apm-server.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: apm-deployment
  labels:
    app: apm-deployment
spec:
  replicas: 1
  selector:
    matchLabels:
      app: apm-deployment
  template:
    metadata:
      labels:
        app: apm-deployment
        env: prod
    spec:
      containers:
        - name: apm-deployment
          image: "elastic/apm-server:7.9.0"
          imagePullPolicy: IfNotPresent
          env:
            - name: REGISTRY_STORAGE_DELETE_ENABLED
              value: "true"
          volumeMounts:
            - name: apm-server-config
              mountPath: /usr/share/apm-server/apm-server.yml
              subPath: apm-server.yml
          ports:
            - containerPort: 8200
      volumes:
        - name: apm-server-config
          configMap:
            name: apm-server-config

---

kind: Service
apiVersion: v1
metadata:
  name: apm-deployment-svc
  labels:
    app: apm-deployment-svc
spec:
  type: NodePort
  ports:
    - name: http
      port: 8200
      protocol: TCP
      nodePort: 30010
  selector:
    app: apm-deployment

---

apiVersion: v1
kind: ConfigMap
metadata:
  name: apm-server-config
  labels:
    app: apm-server
data:
  apm-server.yml: |-
    apm-server:
      host: "0.0.0.0:8200"
      rum:
        enabled: true
    output.elasticsearch:
      hosts: elasticsearch-service:9200

Note:

1. Replace the Elasticsearch host to match your setup.

2. Only the RUM (JavaScript) module is enabled.

2. Add the code below to a page that every view of your app loads, e.g. index.html

<script src="elastic-apm-rum.umd.min.js" crossorigin></script>
<script>
  elasticApm.init({
    serviceName: 'test-app1',
    serverUrl: 'http://192.168.0.183:30010',
  })
</script>
<body>
  This is test-app1
</body>

Note:

1. Replace serverUrl with the address of your APM server (here a node IP plus the NodePort 30010).

2. Download elastic-apm-rum.umd.min.js from GitHub.

3. Kibana dashboard for APM

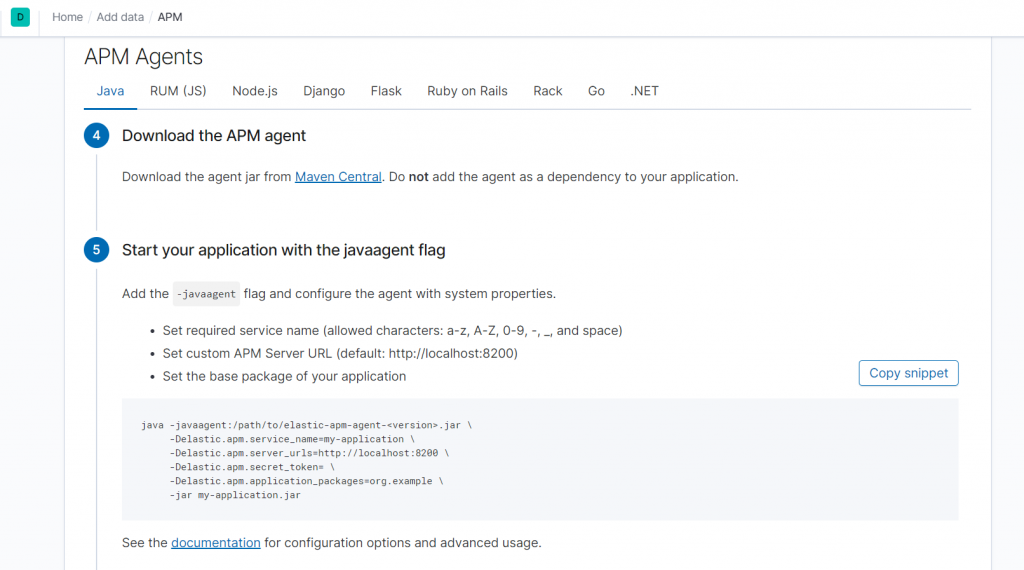

The performance of apps written in other languages can be monitored as well.

Run ELK on Kubernetes

1. elasticsearch.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: elasticsearch-deployment
  labels:
    app: elasticsearch
    env: prod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      name: elasticsearch-deployment
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: elasticsearch:7.8.0
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              memory: "1024Mi"
              cpu: "200m"
            limits:
              memory: "2048Mi"
              cpu: "500m"
          env:
            - name: discovery.type
              value: single-node
          volumeMounts:
            - name: elasticsearch-nfs
              mountPath: /usr/share/elasticsearch/data
          ports:
            - name: tcp-port
              containerPort: 9200
      volumes:
        - name: elasticsearch-nfs
          nfs:
            server: 192.168.0.184
            path: "/opt/nfs1/elasticsearch"

---

apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-service
  labels:
    app: elasticsearch
    env: prod
spec:
  selector:
    app: elasticsearch
  type: NodePort
  ports:
    - name: elasticsearch
      port: 9200
      targetPort: 9200
      nodePort: 30061

2. kibana.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana-deployment
  labels:
    app: kibana
    env: prod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      name: kibana-deployment
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: kibana:7.8.0
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              memory: "512Mi"
              cpu: "200m"
            limits:
              memory: "1024Mi"
              cpu: "400m"
          env:
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch-service:9200
          volumeMounts:
            - name: kibana-nfs
              mountPath: /usr/share/kibana/data
          ports:
            - name: tcp-port
              containerPort: 5601
      volumes:
        - name: kibana-nfs
          nfs:
            server: 192.168.0.184
            path: "/opt/nfs1/kibana"

---

apiVersion: v1
kind: Service
metadata:
  name: kibana-service
  labels:
    app: kibana
    env: prod
spec:
  selector:
    app: kibana
  type: NodePort
  ports:
    - name: kibana
      port: 5601
      targetPort: 5601
      nodePort: 30063

3. logstash.yml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: logstash-deployment
  labels:
    app: logstash
    env: prod
spec:
  replicas: 1
  selector:
    matchLabels:
      app: logstash
  template:
    metadata:
      name: logstash-deployment
      labels:
        app: logstash
    spec:
      containers:
        - name: logstash
          image: logstash:7.8.0
          imagePullPolicy: IfNotPresent
          resources:
            requests:
              memory: "512Mi"
              cpu: "200m"
            limits:
              memory: "1024Mi"
              cpu: "200m"
          env:
            - name: xpack.monitoring.elasticsearch.hosts
              value: http://elasticsearch-service:9200
          volumeMounts:
            - name: logstash-nfs
              mountPath: /usr/share/logstash/pipeline
          ports:
            - name: tcp-port
              containerPort: 5044
      nodeSelector:
        node: lp-knode-02
      volumes:
        - name: logstash-nfs
          nfs:
            server: 192.168.0.184
            path: "/opt/nfs1/logstash/pipeline"

---

apiVersion: v1
kind: Service
metadata:
  name: logstash-service
  labels:
    app: logstash
    env: prod
spec:
  selector:
    app: logstash
  type: NodePort
  ports:
    - name: logstash
      port: 5044
      targetPort: 5044
      nodePort: 30062

- Logstash pipeline config files for input, output and filter

02-beats-input.conf

input {
  beats {
    port => 5044
  }
}

30-elasticsearch-output.conf

output {
  elasticsearch {
    hosts => ["http://elasticsearch-service:9200"]
    manage_template => false
    index => "%{[@metadata][beat]}-%{[@metadata][version]}-%{+YYYY.MM.dd}"
  }
}

10-syslog-filter.conf

filter {
  if [fileset][module] == "system" {
    if [fileset][name] == "auth" {
      grok {
        match => { "message" => ["%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: %{DATA:[system][auth][ssh][event]} %{DATA:[system][auth][ssh][method]} for (invalid user )?%{DATA:[system][auth][user]} from %{IPORHOST:[system][auth][ssh][ip]} port %{NUMBER:[system][auth][ssh][port]} ssh2(: %{GREEDYDATA:[system][auth][ssh][signature]})?",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: %{DATA:[system][auth][ssh][event]} user %{DATA:[system][auth][user]} from %{IPORHOST:[system][auth][ssh][ip]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sshd(?:\[%{POSINT:[system][auth][pid]}\])?: Did not receive identification string from %{IPORHOST:[system][auth][ssh][dropped_ip]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} sudo(?:\[%{POSINT:[system][auth][pid]}\])?: \s*%{DATA:[system][auth][user]} :( %{DATA:[system][auth][sudo][error]} ;)? TTY=%{DATA:[system][auth][sudo][tty]} ; PWD=%{DATA:[system][auth][sudo][pwd]} ; USER=%{DATA:[system][auth][sudo][user]} ; COMMAND=%{GREEDYDATA:[system][auth][sudo][command]}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} groupadd(?:\[%{POSINT:[system][auth][pid]}\])?: new group: name=%{DATA:system.auth.groupadd.name}, GID=%{NUMBER:system.auth.groupadd.gid}",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} useradd(?:\[%{POSINT:[system][auth][pid]}\])?: new user: name=%{DATA:[system][auth][user][add][name]}, UID=%{NUMBER:[system][auth][user][add][uid]}, GID=%{NUMBER:[system][auth][user][add][gid]}, home=%{DATA:[system][auth][user][add][home]}, shell=%{DATA:[system][auth][user][add][shell]}$",
                  "%{SYSLOGTIMESTAMP:[system][auth][timestamp]} %{SYSLOGHOST:[system][auth][hostname]} %{DATA:[system][auth][program]}(?:\[%{POSINT:[system][auth][pid]}\])?: %{GREEDYMULTILINE:[system][auth][message]}"] }
        pattern_definitions => {
          "GREEDYMULTILINE"=> "(.|\n)*"
        }
        remove_field => "message"
      }
      date {
        match => [ "[system][auth][timestamp]", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
      }
      geoip {
        source => "[system][auth][ssh][ip]"
        target => "[system][auth][ssh][geoip]"
      }
    }
    else if [fileset][name] == "syslog" {
      grok {
        match => { "message" => ["%{SYSLOGTIMESTAMP:[system][syslog][timestamp]} %{SYSLOGHOST:[system][syslog][hostname]} %{DATA:[system][syslog][program]}(?:\[%{POSINT:[system][syslog][pid]}\])?: %{GREEDYMULTILINE:[system][syslog][message]}"] }
        pattern_definitions => { "GREEDYMULTILINE" => "(.|\n)*" }
        remove_field => "message"
      }
      date {
        match => [ "[system][syslog][timestamp]", "MMM  d HH:mm:ss", "MMM dd HH:mm:ss" ]
      }
    }
  }
}
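
To see what the syslog branch of the filter does to a single line, here is a rough Python sketch of the same idea: split the line into timestamp, hostname, program, optional pid and message, then parse the timestamp. The regular expression is a simplified stand-in for the grok patterns and not their exact definition. The function name parse_syslog and the sample line in the usage comment are made up for illustration. The log line carries no year, so the sketch takes it as a parameter.

```python
import re
from datetime import datetime

# Simplified stand-ins for SYSLOGTIMESTAMP, SYSLOGHOST, DATA, POSINT and
# GREEDYMULTILINE; the real grok definitions are stricter.
SYSLOG_LINE = re.compile(
    r"(?P<timestamp>[A-Z][a-z]{2}\s+\d{1,2} \d{2}:\d{2}:\d{2}) "
    r"(?P<hostname>\S+) "
    r"(?P<program>[^\[:]+)(?:\[(?P<pid>\d+)\])?: "
    r"(?P<message>(?:.|\n)*)"
)


def parse_syslog(line, year):
    """Return the extracted fields as a dict, or None if the line does not match."""
    match = SYSLOG_LINE.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    # Mirrors the date filter: both "Jul  5" and "Jul 15" are accepted,
    # because whitespace in the format matches one or more spaces.
    fields["@timestamp"] = datetime.strptime(
        f"{year} {fields['timestamp']}", "%Y %b %d %H:%M:%S"
    )
    return fields


# Assumed usage with a made-up log line:
#   parse_syslog("Jul  5 10:15:01 web01 CRON[1234]: (root) CMD (true)", 2020)
```

A line without a pid, such as one logged by the kernel, matches too and leaves pid set to None.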

Set up an Elasticsearch cluster with 3 nodes

1. Update /etc/hosts on all 3 nodes

192.168.0.50 elk1.local

192.168.0.51 elk2.local

192.168.0.52 elk3.local

Note: At least 2 of the 3 nodes must be up for the cluster to elect a master and keep working.
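
The "at least 2 nodes" rule follows from majority voting among master-eligible nodes. A small Python sketch of the arithmetic, assuming all three nodes are master-eligible (the function names are made up for illustration):

```python
def quorum(master_eligible_nodes):
    """Smallest majority of the given number of master-eligible nodes."""
    return master_eligible_nodes // 2 + 1


def tolerated_failures(master_eligible_nodes):
    """How many master-eligible nodes can be down while a majority remains."""
    return master_eligible_nodes - quorum(master_eligible_nodes)


for n in (1, 2, 3, 5):
    print(f"{n} nodes: quorum {quorum(n)}, tolerates {tolerated_failures(n)} down")
```

For 3 nodes this gives a quorum of 2 and one tolerated failure, which is why a two-node cluster adds no fault tolerance over a single node.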

2. Install Elasticsearch on all 3 nodes

rpm --import https://artifacts.elastic.co/GPG-KEY-elasticsearch

echo '[elasticsearch-7.x]
name=Elasticsearch repository for 7.x packages
baseurl=https://artifacts.elastic.co/packages/7.x/yum
gpgcheck=1
gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
enabled=1
autorefresh=1
type=rpm-md' > /etc/yum.repos.d/elasticsearch.repo

### Install elasticsearch

yum -y install elasticsearch

### Enable elasticsearch

systemctl enable elasticsearch

3. Edit /etc/elasticsearch/elasticsearch.yml and set the cluster name (e.g. elk-cluster)

cluster.name: elk-cluster
node.name: elk1.local
path.data: /var/lib/elasticsearch
path.logs: /var/log/elasticsearch
network.host: 192.168.0.50
discovery.seed_hosts: ["elk1.local", "elk2.local", "elk3.local"]
cluster.initial_master_nodes: ["elk1.local", "elk2.local", "elk3.local"]

On the other 2 Elasticsearch nodes, change only node.name and network.host.
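
Since only node.name and network.host differ between the three nodes, the per-node files can be generated instead of edited by hand. A minimal Python sketch, using the host names and addresses from step 1; the helper name render_config is an assumption for illustration:

```python
# Host names and addresses taken from the /etc/hosts entries in step 1.
NODES = {
    "elk1.local": "192.168.0.50",
    "elk2.local": "192.168.0.51",
    "elk3.local": "192.168.0.52",
}


def render_config(node_name, nodes=NODES, cluster_name="elk-cluster"):
    """Return the elasticsearch.yml text for one node of the cluster."""
    hosts = ", ".join(f'"{name}"' for name in nodes)
    lines = [
        f"cluster.name: {cluster_name}",
        f"node.name: {node_name}",
        "path.data: /var/lib/elasticsearch",
        "path.logs: /var/log/elasticsearch",
        f"network.host: {nodes[node_name]}",
        f"discovery.seed_hosts: [{hosts}]",
        f"cluster.initial_master_nodes: [{hosts}]",
    ]
    return "\n".join(lines) + "\n"


for name in NODES:
    print(f"# --- {name} ---")
    print(render_config(name), end="")
```

Each printed section can then be copied to /etc/elasticsearch/elasticsearch.yml on the matching node.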

4. Restart the elasticsearch service on all 3 nodes

systemctl restart elasticsearch

After the restart, one node is elected as master.

5. Check the master node in the Elasticsearch cluster

curl -X GET "192.168.0.50:9200/_cat/master?v&pretty"

More: https://www.elastic.co/guide/en/elasticsearch/reference/current/cat-master.html

More information about setting up a cluster: https://www.elastic.co/guide/en/elasticsearch/reference/current/modules-discovery.html
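
The same check can be scripted against every node to confirm that they all report the same master, which would indicate one cluster rather than three separate single-node ones. A minimal Python sketch, assuming the cat master API is called with format=json; the function names are made up for illustration:

```python
import json
import urllib.request


def parse_master(body):
    """Return the master's node name from a _cat/master?format=json body."""
    rows = json.loads(body)
    if not rows:
        return None
    return rows[0]["node"]


def current_master(base_url, opener=urllib.request.urlopen):
    """Ask one node which node it currently sees as master."""
    with opener(f"{base_url}/_cat/master?format=json") as response:
        return parse_master(response.read().decode("utf-8"))


def nodes_agree(base_urls, opener=urllib.request.urlopen):
    """True if every queried node reports the same (non-empty) master."""
    masters = {current_master(url, opener) for url in base_urls}
    return len(masters) == 1 and None not in masters


# Assumed usage with the addresses from step 1:
#   nodes_agree([f"http://192.168.0.{n}:9200" for n in (50, 51, 52)])
```

The opener parameter exists only so that the logic can be tried without a live cluster.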

Simple custom fields in Logstash – ELK

Sample log:

abc-xyz 123

abc-xyz 123

abc-xyz 123

Sending the sample log to the ELK server:

echo -n "abc-xyz 123" >/dev/tcp/ELK_SERVER_IP/2001

custom1.conf

input {
  tcp {
    port => 2001
    type => "syslog"
  }
}

filter {
  grok {
    match => {"message" => "%{WORD:word1}-%{WORD:word2} %{NUMBER:number1}"}
  }
  #add tag
  mutate {
    add_tag => [ "%{word1}" ]
  }
  #add custom field
  mutate {
    add_field => { "logstype" => "sample" }
  }
}

output {
  if [type] == "syslog" {
    elasticsearch {
      hosts => ["localhost:9200"]
      index => "custom1-%{+YYYY.MM.dd}"
    }
    #for debug
    #stdout {codec => rubydebug}
  }
}

Run logstash to collect the logs:

logstash -f custom1.conf

NOTE:

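– If number1 was already indexed as a string, delete the old index before changing its type, because the mapping of an existing field cannot be changed in place.

– If add_field is used with a field name that grok has already set, the field ends up holding both values.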
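
Both notes can be illustrated with a rough Python imitation of the grok match on the sample line. The regular expression approximates the WORD and NUMBER grok patterns (NUMBER is simplified to an optionally signed decimal). The helper names grok and add_field are made up for this sketch, and add_field only mimics the "double value" effect described above.

```python
import re

# WORD is \b\w+\b in grok; NUMBER is simplified here.
PATTERN = re.compile(
    r"(?P<word1>\b\w+\b)-(?P<word2>\b\w+\b) (?P<number1>[+-]?\d+(?:\.\d+)?)"
)


def grok(message):
    """Return the named captures as a dict, or None if nothing matches."""
    match = PATTERN.search(message)
    return match.groupdict() if match else None


def add_field(event, name, value):
    """Add a field; if it already exists, keep both values in a list."""
    if name in event:
        existing = event[name]
        if not isinstance(existing, list):
            existing = [existing]
        event[name] = existing + [value]
    else:
        event[name] = value
    return event


event = grok("abc-xyz 123")
print(event)  # {'word1': 'abc', 'word2': 'xyz', 'number1': '123'}
print(add_field(dict(event), "word1", "again")["word1"])  # ['abc', 'again']
```

Note that number1 comes out as the string '123': captures are strings unless they are converted, which is how a field can end up indexed as a string in the first place.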