1. Download the latest stable version of Prometheus from https://prometheus.io/download/ (the commands below use v2.16.0)

wget https://github.com/prometheus/prometheus/releases/download/v2.16.0/prometheus-2.16.0.linux-amd64.tar.gz

tar -xzf prometheus-2.16.0.linux-amd64.tar.gz

mkdir -p /etc/prometheus

cd prometheus-2.16.0.linux-amd64

mv prometheus /usr/local/bin/

mv promtool /usr/local/bin/

mv tsdb /usr/local/bin/

mv consoles /etc/prometheus/consoles

mv console_libraries /etc/prometheus/console_libraries

mv prometheus.yml /etc/prometheus/

mkdir -p /var/lib/prometheus
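Before unpacking the tarball from step 1, it can be worth comparing its SHA-256 digest with the value published in the release's sha256sums.txt. A minimal sketch, assuming `sha256sum` is installed; `verify_sha256` is a hypothetical helper name, not part of Prometheus:

```shell
# Compare a file's SHA-256 digest with an expected value.
# verify_sha256 is a hypothetical helper, not a Prometheus tool.
verify_sha256() {
    file=$1
    expected=$2
    # sha256sum prints "<digest>  <filename>"; keep only the digest.
    actual=$(sha256sum "$file" | awk '{print $1}')
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        return 1
    fi
}

# Example (paste the digest listed for this file in sha256sums.txt):
# verify_sha256 prometheus-2.16.0.linux-amd64.tar.gz "<expected digest>"
```

The function returns a non-zero status on a mismatch, so it can gate the `tar` step with `&&`.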

2. Create Prometheus service

echo '[Unit]

Description=Prometheus Server

Documentation=https://prometheus.io/docs/introduction/overview/

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Type=simple

Restart=on-failure

ExecStart=/usr/local/bin/prometheus \
--config.file=/etc/prometheus/prometheus.yml \
--storage.tsdb.path=/var/lib/prometheus \
--web.console.templates=/etc/prometheus/consoles \
--web.console.libraries=/etc/prometheus/console_libraries \
--storage.tsdb.retention.time=30d

[Install]

WantedBy=multi-user.target' > /etc/systemd/system/prometheus.service
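The unit file from step 2 can also be written with a quoted heredoc, which keeps each backslash continuation directly followed by the next flag. This is a sketch only: `write_prometheus_unit` is a hypothetical helper, and the optional path argument exists so the output can be inspected before it is written to /etc/systemd/system.

```shell
# Write the Prometheus unit file from step 2 to the given path.
# write_prometheus_unit is a hypothetical helper name.
write_prometheus_unit() {
    target=${1:-/etc/systemd/system/prometheus.service}
    # The quoted 'EOF' keeps backslashes and other characters literal.
    cat > "$target" <<'EOF'
[Unit]
Description=Prometheus Server
Documentation=https://prometheus.io/docs/introduction/overview/
Wants=network-online.target
After=network-online.target

[Service]
User=root
Group=root
Type=simple
Restart=on-failure
ExecStart=/usr/local/bin/prometheus \
--config.file=/etc/prometheus/prometheus.yml \
--storage.tsdb.path=/var/lib/prometheus \
--web.console.templates=/etc/prometheus/consoles \
--web.console.libraries=/etc/prometheus/console_libraries \
--storage.tsdb.retention.time=30d

[Install]
WantedBy=multi-user.target
EOF
}

# Example: write to a scratch file first and review it.
# write_prometheus_unit /tmp/prometheus.service
```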

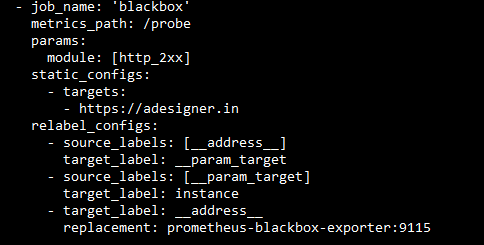

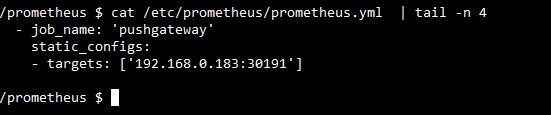

3. Configure /etc/prometheus/prometheus.yml

global:
  scrape_interval: 30s
  evaluation_interval: 30s

scrape_configs:
  - job_name: 'server1'
    static_configs:
      - targets: ['localhost:9100']
  - job_name: 'server2'
    static_configs:
      - targets: ['192.168.0.150:9100']
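The configuration from step 3 can be checked before the service is started, using the `promtool` binary installed in step 1 and its `check config` subcommand. A minimal sketch; `check_prometheus_config` and the `PROMTOOL` override variable are hypothetical names introduced here:

```shell
# PROMTOOL can be overridden; it defaults to the binary installed in step 1.
PROMTOOL=${PROMTOOL:-/usr/local/bin/promtool}

# Validate a Prometheus config file and report the result.
# check_prometheus_config is a hypothetical helper name.
check_prometheus_config() {
    config=${1:-/etc/prometheus/prometheus.yml}
    if "$PROMTOOL" check config "$config"; then
        echo "config OK: $config"
    else
        echo "config INVALID: $config" >&2
        return 1
    fi
}

# Example:
# check_prometheus_config /etc/prometheus/prometheus.yml
```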

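4. Download node_exporter from https://prometheus.io/download/ (the commands below use v1.0.0-rc.0, which is a release candidate; prefer the latest stable release if one is available)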

wget https://github.com/prometheus/node_exporter/releases/download/v1.0.0-rc.0/node_exporter-1.0.0-rc.0.linux-amd64.tar.gz

tar -xzf node_exporter-1.0.0-rc.0.linux-amd64.tar.gz

mv node_exporter-1.0.0-rc.0.linux-amd64/node_exporter /usr/local/bin/

5. Create service for node_exporter

echo '[Unit]

Description=Node Exporter

Wants=network-online.target

After=network-online.target

[Service]

User=root

Group=root

Type=simple

Restart=on-failure

ExecStart=/usr/local/bin/node_exporter

[Install]

WantedBy=multi-user.target

' >/etc/systemd/system/node_exporter.service

6. Enable and start the prometheus and node_exporter services

systemctl daemon-reload

systemctl enable node_exporter

systemctl enable prometheus

systemctl start prometheus

systemctl start node_exporter

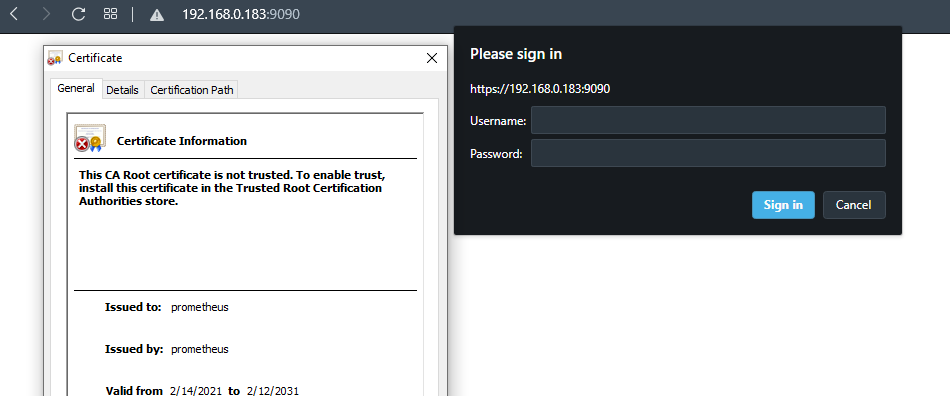
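After step 6, a quick probe can confirm that both services answer over HTTP. The sketch below assumes `curl` is installed and uses Prometheus's `/-/healthy` endpoint and node_exporter's `/metrics` endpoint on the ports used in this guide; `probe_endpoint` is a hypothetical helper name.

```shell
# Report whether a URL answers with a successful HTTP status.
# probe_endpoint is a hypothetical helper name.
probe_endpoint() {
    name=$1
    url=$2
    # -f: fail on HTTP errors, -s: no progress output, -S: still print errors,
    # -o /dev/null: discard the response body.
    if curl -fsS -o /dev/null --max-time 5 "$url"; then
        echo "$name: up"
    else
        echo "$name: down"
        return 1
    fi
}

# Example, run on the monitoring server:
# probe_endpoint prometheus    http://localhost:9090/-/healthy
# probe_endpoint node_exporter http://localhost:9100/metrics
```

If a probe reports "down", `systemctl status prometheus` or `journalctl -u prometheus` usually shows why the service failed to start.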

7. Prometheus will be available at http://server_ip_address:9090

8. Install Grafana

echo '[grafana]

name=grafana

baseurl=https://packages.grafana.com/oss/rpm

repo_gpgcheck=1

enabled=1

gpgcheck=1

gpgkey=https://packages.grafana.com/gpg.key

sslverify=1

sslcacert=/etc/pki/tls/certs/ca-bundle.crt' > /etc/yum.repos.d/grafana.repo

yum install grafana

systemctl start grafana-server

systemctl enable grafana-server

9. Grafana will be accessible at http://server_ip_address:3000

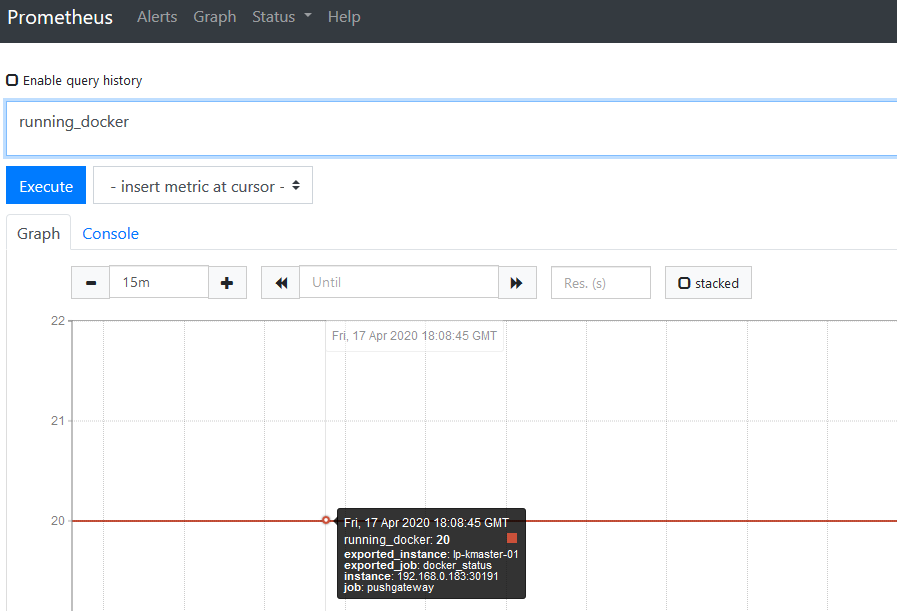
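Grafana needs a Prometheus data source before the queries in this guide can be used in dashboards. It can be added in the web UI, or provisioned from a file. The sketch below assumes the RPM package's default provisioning directory /etc/grafana/provisioning/datasources and a Prometheus server on localhost:9090; `write_grafana_datasource` and the file name prometheus.yml are hypothetical choices.

```shell
# Write a Grafana data source provisioning file that points at Prometheus.
# write_grafana_datasource is a hypothetical helper name.
write_grafana_datasource() {
    dir=${1:-/etc/grafana/provisioning/datasources}
    prom_url=${2:-http://localhost:9090}
    mkdir -p "$dir"
    # Unquoted EOF so that $prom_url is expanded into the file.
    cat > "$dir/prometheus.yml" <<EOF
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: $prom_url
    isDefault: true
EOF
}

# Example:
# write_grafana_datasource
# systemctl restart grafana-server
```

Grafana reads provisioning files at startup, so the service has to be restarted after the file is written.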

10. CPU, RAM, and disk usage queries for Prometheus and Grafana

#CPU:

100 - (avg by (job) (irate(node_cpu_seconds_total{mode="idle"}[5m])) * 100)

#RAM:

100 * avg by (job) (1 - ((avg_over_time(node_memory_MemFree_bytes[10m]) + avg_over_time(node_memory_Cached_bytes[10m]) + avg_over_time(node_memory_Buffers_bytes[10m])) / avg_over_time(node_memory_MemTotal_bytes[10m])))

#DISK usage:

100 - avg by (job) ((node_filesystem_avail_bytes{mountpoint="/",fstype!="rootfs"} * 100) / node_filesystem_size_bytes{mountpoint="/",fstype!="rootfs"})

#DISK read IO:

avg by (job) (irate(node_disk_read_bytes_total{device="sda"}[5m]) / 1024 / 1024)

#DISK write IO:

avg by (job) (irate(node_disk_written_bytes_total{device="sda"}[1m]) / 1024 / 1024)
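The queries above can be tried from the command line through the Prometheus HTTP API before they are pasted into a Grafana panel. A minimal sketch, assuming `curl` is installed and Prometheus listens on localhost:9090; `prom_query` is a hypothetical helper name.

```shell
# Run an instant PromQL query against the Prometheus HTTP API.
# prom_query is a hypothetical helper name.
prom_query() {
    expr=$1
    base=${2:-http://localhost:9090}
    # -G sends the URL-encoded data as a GET query string, so braces,
    # quotes, and spaces in the expression do not need manual escaping.
    curl -fsS -G --data-urlencode "query=$expr" "$base/api/v1/query"
}

# Example (CPU usage per job, from the query above):
# prom_query '100 - (avg by (job) (irate(node_cpu_seconds_total{mode="idle"}[5m])) * 100)'
```

The response is JSON; the values are under `data.result`, with one entry per `job` label for the queries in this section.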