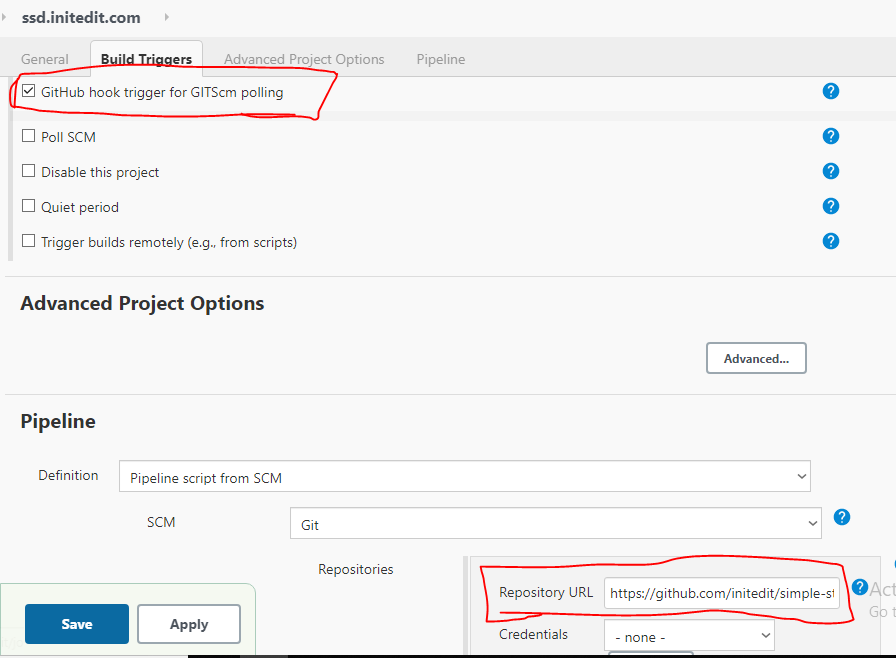

1. Project > Configure > check the GitHub hook trigger and add the repository URL

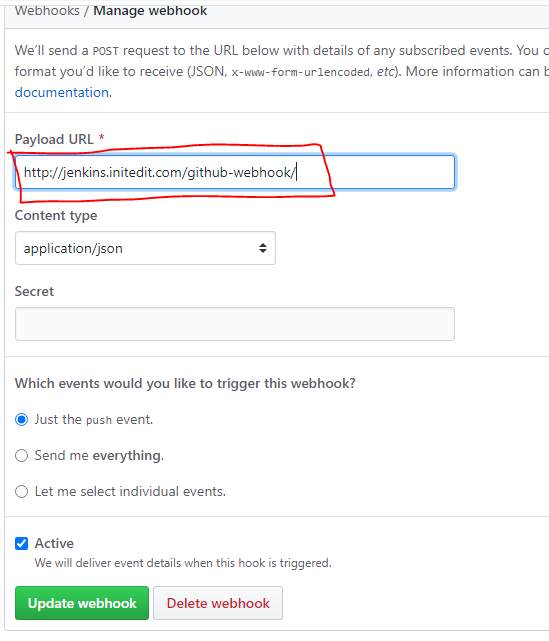

2. On the GitHub project > Settings > Webhooks > Add webhook

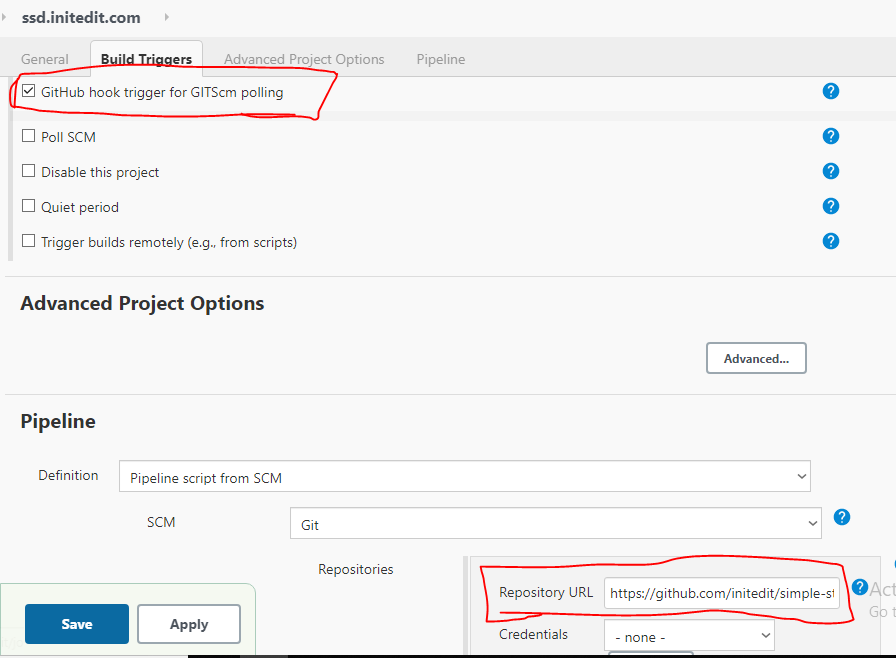

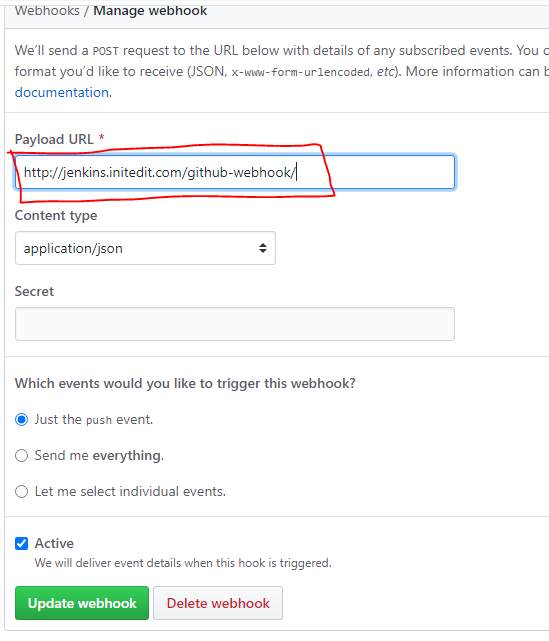

1. Create a serverless python3 deployment

    faas-cli new --lang python3 python-fn --gateway http://192.168.0.183:31112 --prefix=192.168.0.183:30500/python-fn

--prefix = Docker private repository

--gateway = OpenFaaS gateway server

2. This creates the python-fn directory and the python-fn.yml file

3. Write your Python code inside python-fn/handler.py

    def handle(req):
        """handle a request to the function
        Args:
            req (str): request body
        """
        print("hola-openfaas")
        return req
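The handler above simply echoes the request body. As a minimal sketch of something slightly more useful, the variant below assumes the caller sends a JSON body and answers with JSON. The `name` field and the response shape are hypothetical and only for illustration; the only thing taken from the template is the `handle(req)` signature with a string body.

```python
import json


def handle(req):
    """Hypothetical variant of handler.py: greet the caller by name.

    Args:
        req (str): request body, assumed here to be JSON (or empty)
    """
    try:
        payload = json.loads(req) if req.strip() else {}
    except json.JSONDecodeError:
        # Report malformed input instead of raising inside the function.
        return json.dumps({"error": "request body is not valid JSON"})
    if not isinstance(payload, dict):
        return json.dumps({"error": "expected a JSON object"})
    name = payload.get("name", "world")  # "name" is an illustrative field
    return json.dumps({"message": "hola " + str(name)})
```

Because the function is plain Python, it can be called locally, for example `handle('{"name": "openfaas"}')`, before it is built and deployed.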

4. Build / Push / Deploy

    faas-cli build -f python-fn.yml
    faas-cli push -f python-fn.yml
    export OPENFAAS_URL=http://192.168.0.183:31112
    faas-cli login --password "YOUR_Openfaas_PASSWORD"
    faas-cli deploy -f python-fn.yml

5. Remove

    faas-cli remove -f python-fn.yml
    rm -rf python-fn*

OpenFaaS installation in k8s

    curl -sL https://cli.openfaas.com | sudo sh
    git clone https://github.com/openfaas/faas-netes
    kubectl apply -f https://raw.githubusercontent.com/openfaas/faas-netes/master/namespaces.yml
    PASSWORD=$(head -c 12 /dev/urandom | shasum | cut -d' ' -f1)
    kubectl -n openfaas create secret generic basic-auth \
        --from-literal=basic-auth-user=admin \
        --from-literal=basic-auth-password="$PASSWORD"
    cd faas-netes
    kubectl apply -f ./yaml
    nohup kubectl port-forward svc/gateway -n openfaas 31112:8080 &
    export OPENFAAS_URL=http://192.168.0.183:31112
    echo -n "$PASSWORD" | faas-cli login --password-stdin
    echo "$PASSWORD"

Prometheus-k8.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: prometheus-deployment
      labels:
        app: prometheus
        env: prod
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: prometheus
          env: prod
      template:
        metadata:
          labels:
            app: prometheus
            env: prod
        spec:
          containers:
            - name: prometheus-container
              image: prom/prometheus
              imagePullPolicy: IfNotPresent
              resources:
                requests:
                  memory: "128Mi"
                  cpu: "200m"
                limits:
                  memory: "256Mi"
                  cpu: "200m"
              volumeMounts:
                - name: config-volume
                  mountPath: /etc/prometheus/prometheus.yml
                  subPath: prometheus.yml
                - name: prometheus-storage-volume
                  mountPath: /prometheus
              ports:
                - containerPort: 9090
          volumes:
            - name: config-volume
              configMap:
                name: prometheus-conf
            - name: prometheus-storage-volume
              nfs:
                server: 192.168.0.184
                path: "/opt/nfs1/prometheus"
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: prometheus-service
      labels:
        app: prometheus
        env: prod
    spec:
      selector:
        app: prometheus
        env: prod
      ports:
        - name: promui
          protocol: TCP
          port: 9090
          targetPort: 9090
          nodePort: 30090
      type: NodePort

Create prometheus.yml config-map file

    # The name must match the configMap referenced by the Deployment (prometheus-conf)
    kubectl create configmap prometheus-conf --from-file=/mnt/nfs1/prometheus/prometheus.yml

prometheus.yml

    global:
      scrape_interval: 30s
      evaluation_interval: 30s
    scrape_configs:
      - job_name: 'lp-kmaster-01'
        static_configs:
          - targets: ['192.168.0.183:9100']

Grafana-k8.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: grafana-deployment
      labels:
        app: grafana
        env: prod
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: grafana
      template:
        metadata:
          name: grafana-deployment
          labels:
            app: grafana
            env: prod
        spec:
          containers:
            - name: grafana
              image: grafana/grafana:7.0.0
              imagePullPolicy: IfNotPresent
              resources:
                requests:
                  memory: "128Mi"
                  cpu: "200m"
                limits:
                  memory: "256Mi"
                  cpu: "200m"
              ports:
                - name: grafana
                  containerPort: 3000
              volumeMounts:
                - mountPath: /var/lib/grafana
                  name: grafana-storage
          volumes:
            - name: grafana-storage
              nfs:
                server: 192.168.0.184
                path: "/opt/nfs1/grafana"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: grafana-service
      labels:
        app: grafana
        env: prod
    spec:
      selector:
        app: grafana
      type: NodePort
      ports:
        - port: 3000
          targetPort: 3000
          nodePort: 30091

1. Install Docker

    yum install docker
    systemctl enable docker
    systemctl start docker

2. Run the haproxy Docker image with a persistent volume

    mkdir /opt/haproxy
    # and move haproxy.cfg inside /opt/haproxy
    # publish port 80 because the app1 frontend in haproxy.cfg binds *:80
    docker run -d -p 80:80 -p 8404:8404 -v /opt/haproxy:/usr/local/etc/haproxy:Z haproxy

3. haproxy.cfg

    global
        daemon
        maxconn 256

    defaults
        timeout connect 10s
        timeout client 30s
        timeout server 30s
        log global
        mode http
        option httplog
        maxconn 3000

    frontend stats
        bind *:8404
        stats enable
        stats uri /stats
        stats refresh 10s

    frontend app1
        bind *:80
        default_backend app1_backend

    # each server in a backend needs its own name
    backend app1_backend
        server server1 192.168.0.151:8080 maxconn 32
        server server2 192.168.0.152:8080 maxconn 32
        server server3 192.168.0.153:8080 maxconn 32

docker-compose file

    version: '3'
    services:
      haproxy:
        image: haproxy
        ports:
          - 80:80
          - 8404:8404
        volumes:
          - /opt/haproxy:/usr/local/etc/haproxy

1. Create NFS share

2. pi-hole-deployment.yml

    ---
    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: pi-hole-deployment
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: pi-hole
      template:
        metadata:
          name: pi-hole-deployment
          labels:
            app: pi-hole
            env: prod
        spec:
          containers:
            - name: pi-hole
              image: pihole/pihole
              imagePullPolicy: IfNotPresent
              resources:
                requests:
                  memory: "256Mi"
                  cpu: "200m"
                limits:
                  memory: "512Mi"
                  cpu: "200m"
              volumeMounts:
                - name: pihole-nfs
                  mountPath: /etc/pihole
                - name: dnsmasq-nfs
                  mountPath: /etc/dnsmasq.d
              ports:
                - name: tcp-port
                  containerPort: 53
                  protocol: TCP
                - name: udp-port
                  containerPort: 53
                  protocol: UDP
                - name: http-port
                  containerPort: 80
                - name: https-port
                  containerPort: 443
          volumes:
            - name: pihole-nfs
              nfs:
                server: 192.168.0.184
                path: "/opt/nfs1/pihole/pihole"
            - name: dnsmasq-nfs
              nfs:
                server: 192.168.0.184
                path: "/opt/nfs1/pihole/dnsmasq.d"
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: pi-hole-service
      labels:
        app: pi-hole
        env: prod
    spec:
      selector:
        app: pi-hole
      type: NodePort
      externalIPs:
        - 192.168.0.183
      ports:
        - name: dns-tcp
          port: 53
          targetPort: 53
          nodePort: 30053
          protocol: TCP
        - name: dns-udp
          port: 53
          targetPort: 53
          nodePort: 30053
          protocol: UDP
        - name: http
          port: 800
          targetPort: 80
          nodePort: 30054
        - name: https
          port: 801
          targetPort: 443
          nodePort: 30055

Note: Use externalIPs in the Service so that this IP can be entered as the DNS server in the Wi-Fi settings.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: registry-deployment
      labels:
        app: registry
        env: prod
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: registry
          env: prod
      template:
        metadata:
          labels:
            app: registry
            env: prod
        spec:
          containers:
            - name: registry-container
              image: registry:2
              imagePullPolicy: IfNotPresent
              env:
                - name: REGISTRY_STORAGE_DELETE_ENABLED
                  value: "true"
              resources:
                requests:
                  memory: "256Mi"
                  cpu: "200m"
                limits:
                  memory: "512Mi"
                  cpu: "200m"
              volumeMounts:
                - name: registry-data
                  mountPath: /var/lib/registry
                - name: config-yml
                  mountPath: /etc/docker/registry/config.yml
                  subPath: config.yml
              ports:
                - containerPort: 5000
          volumes:
            - name: registry-data
              nfs:
                server: 192.168.0.184
                path: "/opt/nfs1/registry"
            - name: config-yml
              configMap:
                name: registry-conf
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: registry-conf
    data:
      config.yml: |+
        version: 0.1
        log:
          fields:
            service: registry
        storage:
          filesystem:
            rootdirectory: /var/lib/registry
        http:
          addr: :5000
          headers:
            X-Content-Type-Options: [nosniff]
        health:
          storagedriver:
            enabled: true
            interval: 10s
            threshold: 3
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: registry-service
      labels:
        app: registry
        env: prod
    spec:
      selector:
        app: registry
        env: prod
      ports:
        - name: registry
          protocol: TCP
          port: 5000
          targetPort: 5000
          nodePort: 30500
      type: NodePort

2. As this registry runs over plain HTTP, we need to add it as an insecure registry in /etc/docker/daemon.json on every running worker node

    {
      "insecure-registries": ["192.168.0.183:30500"]
    }

3. Restart the Docker service

    systemctl restart docker

4. Tag the image with the registry server IP and port. A DNS name can be used if available.

    docker tag debian:latest 192.168.0.183:30500/debianlocal:latest

5. Push the image to the private registry server

    docker push 192.168.0.183:30500/debianlocal:latest

6. To delete images from the registry server we will use docker_reg_tool: https://github.com/byrnedo/docker-reg-tool/blob/master/docker_reg_tool

Note:

- Delete the blobdescriptor: inmemory part from /etc/docker/registry/config.yml, which has already been done in this example

- REGISTRY_STORAGE_DELETE_ENABLED = "true" should be present in env

    ./docker_reg_tool http://192.168.0.183:30500 delete debianlocal latest
    # This can be a cron job inside the container
    docker exec -it name_of_registry_container bin/registry garbage-collect /etc/docker/registry/config.yml
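For readers who want to see what a tag deletion looks like on the wire, here is a minimal sketch against the Registry HTTP API v2: resolve the tag to its manifest digest, then delete the manifest by that digest. It is not the code of docker_reg_tool. The helper names are hypothetical, and it assumes the registry from this example with deletes enabled and images stored as Docker schema 2 manifests.

```python
import urllib.request

# Media type of a Docker image manifest (schema 2). The digest returned for
# this type is the one the registry expects in the DELETE request.
MANIFEST_V2 = "application/vnd.docker.distribution.manifest.v2+json"


def manifest_url(registry, repository, reference):
    """Build the v2 manifest URL for a tag or a digest (hypothetical helper)."""
    return "{r}/v2/{repo}/manifests/{ref}".format(
        r=registry.rstrip("/"), repo=repository, ref=reference)


def resolve_digest(registry, repository, tag):
    """Ask the registry which content digest a tag currently points to."""
    request = urllib.request.Request(
        manifest_url(registry, repository, tag),
        headers={"Accept": MANIFEST_V2}, method="HEAD")
    with urllib.request.urlopen(request) as response:
        return response.headers["Docker-Content-Digest"]


def delete_tag(registry, repository, tag):
    """Delete the manifest behind a tag and return the HTTP status code."""
    digest = resolve_digest(registry, repository, tag)
    request = urllib.request.Request(
        manifest_url(registry, repository, digest), method="DELETE")
    with urllib.request.urlopen(request) as response:
        return response.status  # 202 Accepted when the delete succeeds
```

A call such as `delete_tag("http://192.168.0.183:30500", "debianlocal", "latest")` only removes the manifest; the layers are freed on disk once the garbage-collect command above has run.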

1.Deploy pushgateway to kubernetes

pushgateway.yml

apiVersion: apps/v1

kind: Deployment

metadata:

name: pushgateway-deployment

labels:

app: pushgateway

env: prod

spec:

replicas: 1

selector:

matchLabels:

app: pushgateway

env: prod

template:

metadata:

labels:

app: pushgateway

env: prod

spec:

containers:

- name: pushgateway-container

image: prom/pushgateway

imagePullPolicy: IfNotPresent

resources:

requests:

memory: "128Mi"

cpu: "200m"

limits:

memory: "256Mi"

cpu: "200m"

ports:

- containerPort: 9091

---

kind: Service

apiVersion: v1

metadata:

name: pushgateway-service

labels:

app: pushgateway

env: prod

spec:

selector:

app: pushgateway

env: prod

ports:

- name: pushgateway

protocol: TCP

port: 9091

targetPort: 9091

nodePort: 30191

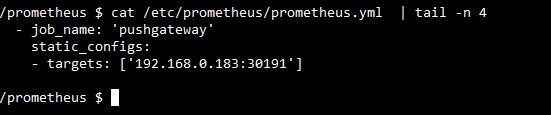

type: NodePort2. Add pushgateway in /etc/prometheus/prometheus.yml

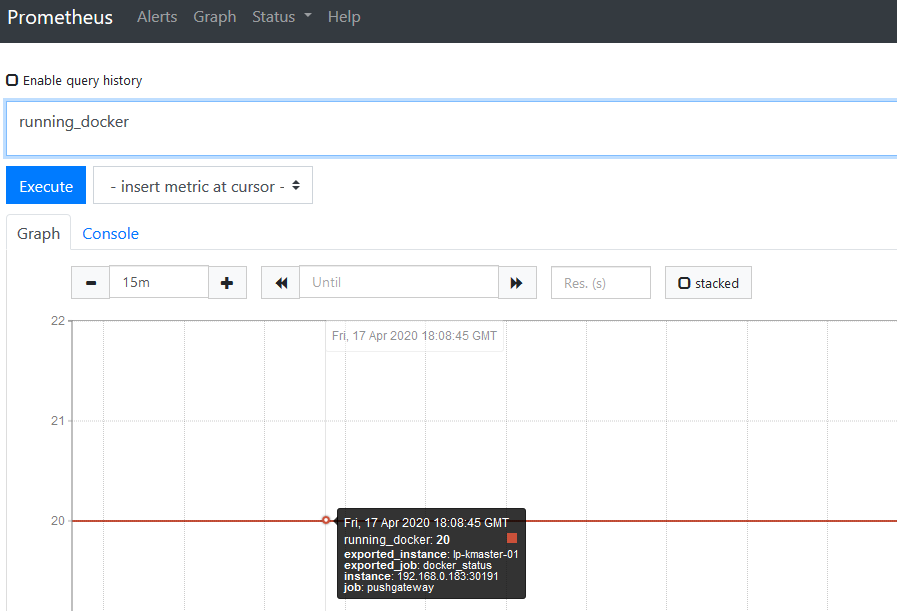

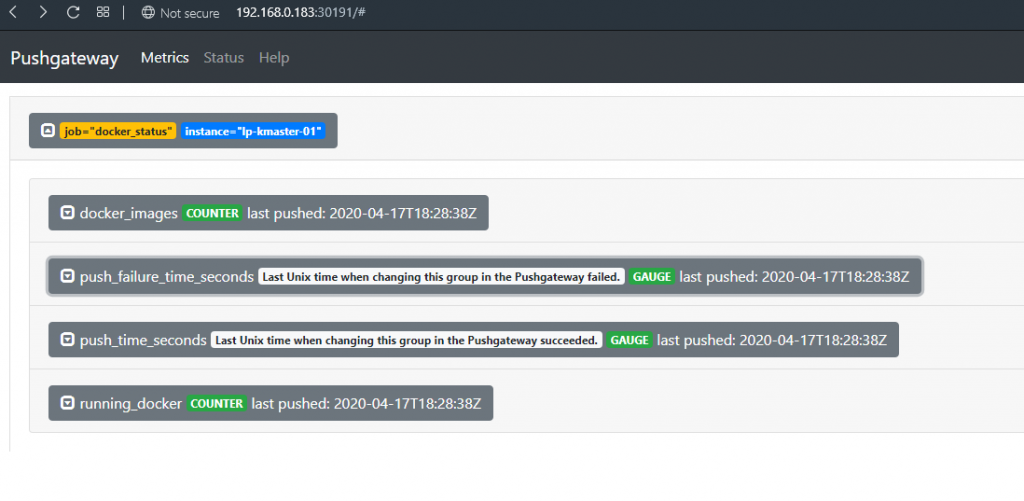

3. Push the running Docker status to pushgateway using the bash script below and add it to crontab

    job="docker_status"
    # -q prints one ID per line, so the header row is not counted
    running_docker=$(docker ps -q | wc -l)
    docker_images=$(docker images -q | wc -l)
    cat <<EOF | curl --data-binary @- http://192.168.0.183:30191/metrics/job/$job/instance/$(hostname)
    # TYPE running_docker gauge
    running_docker $running_docker
    # TYPE docker_images gauge
    docker_images $docker_images
    EOF

4. Data visualization in Prometheus and the pushgateway server

Python code:

    import requests

    job_name = 'cpuload'
    instance_name = 'web1'
    payload_key = 'cpu'
    payload_value = '10'
    # Pushgateway grouping URL: /metrics/job/<job>/instance/<instance>
    url = 'http://192.168.0.183:30191/metrics/job/{j}/instance/{i}'.format(j=job_name, i=instance_name)
    # The body is one "name value" sample per line, terminated by a newline
    response = requests.post(url, data="{k} {v}\n".format(k=payload_key, v=payload_value))
    #print(response.text)

pushgateway PowerShell command:

Invoke-WebRequest "http://192.168.0.183:30191/metrics/job/jenkins/instance/instace_name -Body "process 1`n" -Method Post$process1 = (tasklist /v | Select-String -AllMatches 'Jenkins' | findstr 'java' | %{ $_.Split('')[0]; }) | Out-String

if($process1 -like "java.exe*"){

write-host("This is if statement")

Invoke-WebRequest "http://192.168.0.183:30191/metrics/job/jenkins/instance/instace_name" -Body "jenkins_process 1`n" -Method Post

}else {

write-host("This is else statement")

Invoke-WebRequest "http://192.168.0.183:30191/metrics/job/jenkins/instance/instace_name" -Body "jenkins_process 0`n" -Method Post

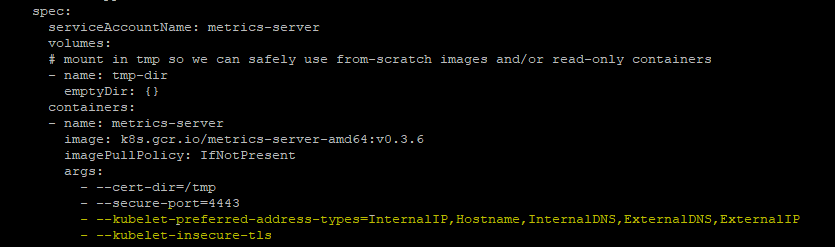

}1. Get the metrics server code form github

    git clone https://github.com/kubernetes-sigs/metrics-server
    cd metrics-server
    # Edit metrics-server-deployment.yaml
    vi deploy/kubernetes/metrics-server-deployment.yaml
    # And add the args below
    args:
      - --kubelet-preferred-address-types=InternalIP,Hostname,InternalDNS,ExternalDNS,ExternalIP
      - --kubelet-insecure-tls

metrics-server-deployment.yaml will look like below

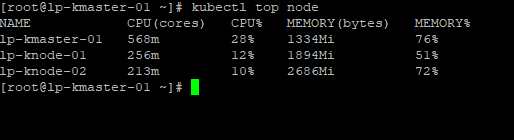

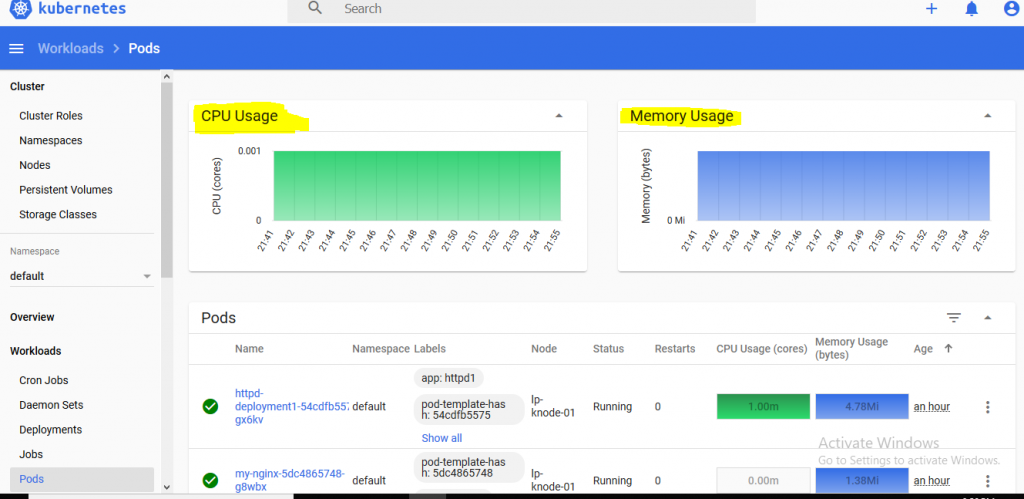

2. After deployment we will get the CPU and RAM usage of the nodes as below

3. Now we can write a Horizontal Pod Autoscaler as below that will autoscale the nginx-app1 deployment, up to a maximum of 5 pods, when CPU usage goes above 80%.

- It checks every 30 seconds whether the deployment needs scaling

- It scales the deployment down after 300 seconds if the load goes down
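To get a feel for the replica counts the autoscaler will settle on, the rule documented for the Kubernetes HPA (desired = ceil(current replicas × current metric / target metric)) can be sketched in a few lines. This is an illustration under assumptions, not the controller's code: the function name is hypothetical, the 10% tolerance is the documented default, and the 80% target and the 1 to 5 replica bounds are the values used in this example. Utilization is measured relative to the CPU request of the pods.

```python
import math


def desired_replicas(current_replicas, current_cpu_percent,
                     target_cpu_percent=80, min_replicas=1, max_replicas=5,
                     tolerance=0.1):
    """Approximate the HPA scaling rule for a CPU utilization target."""
    ratio = current_cpu_percent / target_cpu_percent
    # Close enough to the target: leave the replica count alone.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Never go below minReplicas or above maxReplicas.
    return max(min_replicas, min(max_replicas, wanted))
```

For example, two pods averaging 160% of their CPU request give a ratio of 2, so the sketch asks for four pods; at 400% it would ask for ten and is capped at the maximum of five.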

    kind: HorizontalPodAutoscaler
    apiVersion: autoscaling/v1
    metadata:
      name: nginx-app1-hpa
    spec:
      scaleTargetRef:
        kind: Deployment
        name: nginx-app1
        apiVersion: apps/v1
      minReplicas: 1
      maxReplicas: 5
      targetCPUUtilizationPercentage: 80

4. nginx-app1.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-app1
    spec:
      selector:
        matchLabels:
          run: nginx-app1
      replicas: 2
      template:
        metadata:
          labels:
            run: nginx-app1
        spec:
          containers:
            - name: nginx-app1
              image: nginx
              resources:
                requests:
                  memory: "128Mi"
                  cpu: "100m"
                limits:
                  memory: "256Mi"
                  cpu: "200m"
              ports:
                - containerPort: 80
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: nginx-app1-svc
      labels:
        run: nginx-app1-svc
    spec:
      ports:
        - protocol: TCP
          port: 80
          targetPort: 80
          nodePort: 30083
      selector:
        run: nginx-app1
      type: NodePort

5. Random load generator

    while true
    do
        curl -s http://SERVICE_NAME
        curl -s http://SERVICE_NAME
        curl -s http://SERVICE_NAME
        curl -s http://SERVICE_NAME
        curl -s http://SERVICE_NAME
    done

nginx-kibana-deployment.yml

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: nginx-kibana-deployment
      labels:
        app: nginx-kibana
        env: prod
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: nginx-kibana
          env: prod
      template:
        metadata:
          labels:
            app: nginx-kibana
            env: prod
        spec:
          containers:
            - name: nginx-container
              image: nginx
              imagePullPolicy: IfNotPresent
              resources:
                requests:
                  memory: "128Mi"
                  cpu: "200m"
                limits:
                  memory: "256Mi"
                  cpu: "200m"
              volumeMounts:
                - name: nginx-conf
                  mountPath: /etc/nginx/nginx.conf
                  subPath: nginx.conf
                - name: nginx-admin-htpasswd
                  mountPath: /etc/nginx/admin-htpasswd
                  subPath: admin-htpasswd
              ports:
                - containerPort: 80
          volumes:
            - name: nginx-conf
              configMap:
                name: nginx-conf
            - name: nginx-admin-htpasswd
              configMap:
                name: nginx-admin-htpasswd
    ---
    kind: Service
    apiVersion: v1
    metadata:
      name: nginx-kibana-service
      labels:
        app: nginx-kibana
        env: prod
    spec:
      selector:
        app: nginx-kibana
        env: prod
      ports:
        - name: kibana-ui
          protocol: TCP
          port: 80
          targetPort: 80
          nodePort: 30081
      type: NodePort

nginx.conf

events { }

http

{

upstream kibana

{

server 192.168.0.183:30063;

server 192.168.0.184:30063;

server 192.168.0.185:30063;

}

server

{

listen 80;

location / {

auth_basic "kibana admin";

auth_basic_user_file /etc/nginx/admin-htpasswd;

proxy_pass http://kibana;

}

}

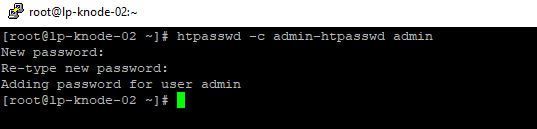

}Create admin-htpasswd auth file with htpasswd for admin user.

    yum install httpd-tools
    htpasswd -c admin-htpasswd admin

- Create config maps in Kubernetes for the above files (nginx.conf, admin-htpasswd)

    kubectl create configmap nginx-conf --from-file nginx.conf
    kubectl create configmap nginx-admin-htpasswd --from-file admin-htpasswd

    yum install epel-release
    yum install nginx
    systemctl start nginx
    systemctl enable nginx

vi /etc/nginx/nginx.conf

    events { }
    http {
        upstream api {
            #least_conn; #other options are also available
            server 192.168.0.57:6443;
            server 192.168.0.93:6443 weight=3;
            server 192.168.0.121:6443 max_fails=3 fail_timeout=30;
        }
        server {
            listen 8888 ssl;
            ssl_certificate test.crt;
            ssl_certificate_key test.key;
            location / {
                proxy_pass https://api;
            }
        }
        server {
            listen 80 default;
            location / {
                proxy_pass https://api;
            }
        }
    }

Load-balancing algorithms:

least_conn

ip_hash

weight=5

max_fails and fail_timeout

More on: http://nginx.org/en/docs/http/load_balancing.html#nginx_load_balancing_health_checks
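When none of the methods above is selected, nginx balances with weighted round-robin, and a server without a weight parameter counts as weight 1. The sketch below is a simplified model of a smooth weighted round-robin, written only to show how weight=3 shifts the share of requests; it is not nginx's implementation, the function name is hypothetical, and it ignores max_fails and fail_timeout. The weights are the ones from the upstream api block above.

```python
def smooth_weighted_round_robin(weights, picks):
    """Return `picks` server names chosen by smooth weighted round-robin.

    weights: dict mapping a server name to its positive integer weight.
    """
    total = sum(weights.values())
    current = {name: 0 for name in weights}
    chosen = []
    for _ in range(picks):
        # Every server earns credit in proportion to its weight.
        for name, weight in weights.items():
            current[name] += weight
        # The server holding the most credit takes the request...
        best = max(current, key=current.get)
        # ...and pays back the total, which spreads the heavy server out.
        current[best] -= total
        chosen.append(best)
    return chosen


servers = {"192.168.0.57": 1, "192.168.0.93": 3, "192.168.0.121": 1}
print(smooth_weighted_round_robin(servers, 5))
```

Over any five consecutive requests in this model, 192.168.0.93 receives three and the other two servers one each, without sending all three to the heavy server back to back.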