kvm-ok

apt install qemu-kvm libvirt-daemon-system

apt install virt-manager

virt-manager

https://documentation.ubuntu.com/server/how-to/virtualisation/libvirt/
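Before installing, it can help to confirm that the CPU advertises hardware virtualization, which is roughly what kvm-ok reports. A minimal sketch, assuming a Linux host; the function name cpu_has_virt is hypothetical, and it only looks at the vmx/svm CPU flags, not at firmware settings or /dev/kvm.

```shell
# Hypothetical helper: succeeds if the given cpuinfo file lists the
# Intel (vmx) or AMD (svm) virtualization flag.
cpu_has_virt() {
    cpuinfo="${1:-/proc/cpuinfo}"
    grep -Eqw 'vmx|svm' "$cpuinfo"
}

if cpu_has_virt 2>/dev/null; then
    echo "CPU virtualization flags found"
else
    echo "No vmx/svm flag found (or /proc/cpuinfo is unavailable)"
fi
```

If the flag is missing on hardware that should support it, virtualization may be disabled in the firmware setup.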


[root@lp-knode-1 ~]# date

Tue Mar 4 19:32:46 IST 2025

[root@lp-knode-1 ~]# timedatectl

               Local time: Tue 2025-03-04 19:33:01 IST
           Universal time: Tue 2025-03-04 14:03:01 UTC
                 RTC time: Tue 2025-03-04 14:27:56
                Time zone: Asia/Kolkata (IST, +0530)
System clock synchronized: no
              NTP service: inactive
          RTC in local TZ: no

[root@lp-knode-1 ~]# timedatectl set-ntp on

[root@lp-knode-1 ~]# timedatectl status

               Local time: Tue 2025-03-04 20:00:13 IST
           Universal time: Tue 2025-03-04 14:30:13 UTC
                 RTC time: Tue 2025-03-04 14:30:13
                Time zone: Asia/Kolkata (IST, +0530)
System clock synchronized: yes
              NTP service: active
          RTC in local TZ: no

[root@lp-knode-1 ~]# date

Tue Mar 4 20:00:17 IST 2025
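The transcript above checks the "System clock synchronized" line by eye. A minimal sketch of the same check in a script, assuming the timedatectl output format shown above; the function name clock_is_synced is hypothetical.

```shell
# Hypothetical helper: reads `timedatectl` output on stdin and succeeds
# only if it reports the system clock as synchronized.
clock_is_synced() {
    grep -Eq '^[[:space:]]*System clock synchronized:[[:space:]]*yes[[:space:]]*$'
}

# Guarded usage: only runs where timedatectl is available.
if command -v timedatectl >/dev/null 2>&1; then
    if timedatectl status 2>/dev/null | clock_is_synced; then
        echo "clock synchronized"
    else
        echo "clock NOT synchronized; consider: timedatectl set-ntp on"
    fi
fi
```

Synchronization can take a short while after NTP is enabled, so a "no" right after `timedatectl set-ntp on` is not necessarily an error.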

Ollama: its CLI follows a pattern similar to Docker's.

curl -L https://ollama.com/download/ollama-linux-amd64.tgz -o ollama-linux-amd64.tgz

sudo tar -C /usr -xzf ollama-linux-amd64.tgz

ollama serve

# Or serve on a different IP and port

OLLAMA_HOST=192.168.29.13:11435 ollama serve

ollama -v

#check graphics card

nvidia-smi

# Default endpoint: http://127.0.0.1:11434/
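OLLAMA_HOST appears in these notes both with a scheme (http://192.168.29.13:11434) and without one (192.168.29.13:11435). A minimal sketch that normalizes either form into a base URL and probes the server; the function name ollama_base_url is hypothetical, and the sketch assumes plain http when no scheme is given.

```shell
# Hypothetical helper: turn an OLLAMA_HOST-style value into a base URL.
# Assumes http when no scheme is given and falls back to the default
# 127.0.0.1:11434 when the value is empty.
ollama_base_url() {
    host="${1:-127.0.0.1:11434}"
    case "$host" in
        http://*|https://*) printf '%s\n' "${host%/}" ;;
        *)                  printf 'http://%s\n' "${host%/}" ;;
    esac
}

# Guarded usage: query the version endpoint only if curl is installed.
if command -v curl >/dev/null 2>&1; then
    curl -fsS --max-time 3 "$(ollama_base_url "${OLLAMA_HOST:-}")/api/version" \
        || echo "Ollama is not reachable"
fi
```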

###Podman/Docker - https://ollama.com/blog/ollama-is-now-available-as-an-official-docker-image

podman run -d --gpus=all --device nvidia.com/gpu=all --security-opt=label=disable -v ollama:/root/.ollama -p 11434:11434 ollama/ollama

user@home:~$ ollama list

NAME               ID              SIZE      MODIFIED
gemma2:latest      ff02c3702f32    5.4 GB    11 hours ago
llama3.2:latest    a80c4f17acd5    2.0 GB    12 hours ago

user@home:~$ ollama run llama3.2

>>> hola

Hola! ¿En qué puedo ayudarte hoy?

>>> hey

What's up? Want to chat about something in particular or just shoot the breeze?

[Unit]
Description=Ollama Service
After=network-online.target

[Service]
ExecStart=/usr/local/bin/ollama serve
User=ollama
Group=ollama
Restart=always
RestartSec=3
Environment="PATH=/usr/local/vitess/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:/usr/games:/usr/local/games:/snap/bin:/root/cardano:/root/cardano/bin"
Environment="OLLAMA_HOST=http://192.168.29.13:11434"

[Install]
WantedBy=default.target

podman run -d -p 3000:8080 --gpus all --device nvidia.com/gpu=all --security-opt=label=disable -e OLLAMA_BASE_URL=http://192.168.29.13:11434 -e WEBUI_AUTH=False -v open-webui:/app/backend/data --name open-webui ghcr.io/open-webui/open-webui:main

https://github.com/ollama/ollama/blob/main/docs/linux.md

For Podman GPU access via CDI: https://docs.nvidia.com/datacenter/cloud-native/container-toolkit/latest/cdi-support.html

docker-compose-web.yml:

services:
  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    deploy:
      resources:
        reservations:
          devices:
            - driver: nvidia
              count: all
              capabilities: [gpu]
    restart: always
    container_name: open-webui
    environment:
      - OLLAMA_BASE_URL=http://192.168.29.142:11434
      - WEBUI_AUTH=False
    volumes:
      - open-webui:/app/backend/data
    ports:
      - 3000:8080
volumes:
  open-webui:

docker run --rm --gpus all ubuntu nvidia-smi

user@home:~$ cat /proc/driver/nvidia/version

NVRM version: NVIDIA UNIX x86_64 Kernel Module 550.120 Fri Sep 13 10:10:01 UTC 2024

apt install nvidia-utils-550

nvtop

Encryption tool:

UI:

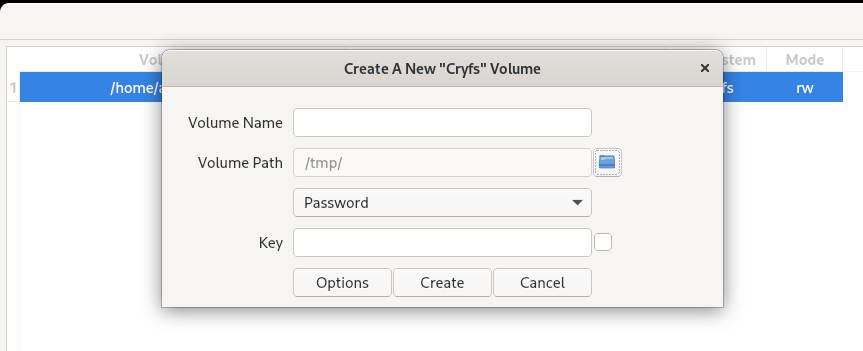

Create volume > cryfs > Volume path (this is where the encrypted data will be stored) > Volume name (e.g. test-cryfs) > Password/Key > Create

df -hT

# View the mount directory, then copy your data into it

[home@home ~]$ df -hT

Filesystem                                Type        Size  Used Avail Use% Mounted on
devtmpfs                                  devtmpfs     32G     0   32G   0% /dev
tmpfs                                     tmpfs        32G  345M   31G   2% /dev/shm
tmpfs                                     tmpfs        13G  2.1M   13G   1% /run
/dev/mapper/fedora_localhost--live-root00 ext4        184G  174G  1.8G  99% /
tmpfs                                     tmpfs        32G   32M   32G   1% /tmp
/dev/nvme0n1p2                            ext4        974M  210M  698M  24% /boot
/dev/nvme0n1p1                            vfat        599M   14M  585M   3% /boot/efi
tmpfs                                     tmpfs       6.3G  188K  6.3G   1% /run/user/1000
cryfs@/home/home/cryfs-test               fuse.cryfs  1.8G   32K  1.8G   1% /home/home/.SiriKali/cryfs-test
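To pick the cryfs mount directory out of a long df listing, the Type column can be filtered. A minimal sketch, assuming the `df -hT` column layout shown above and mount points without spaces; the function name cryfs_mounts is hypothetical.

```shell
# Hypothetical helper: read `df -hT` output on stdin and print the mount
# point (last column) of every fuse.cryfs filesystem.
# Assumes mount points contain no spaces.
cryfs_mounts() {
    awk '$2 == "fuse.cryfs" { print $NF }'
}

df -hT 2>/dev/null | cryfs_mounts
```

The command prints nothing when no cryfs volume is currently mounted.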

Options = select the encryption algorithm

Onedrive client: https://github.com/abraunegg/onedrive/

ls /proc

cat /proc/<process_id>/cmdline

cat /proc/<process_id>/fd/1

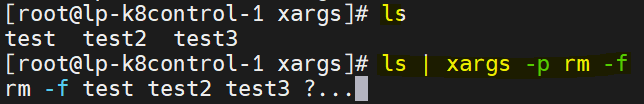
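The arguments in /proc/<process_id>/cmdline are separated by NUL bytes, so a plain cat runs them together. A minimal sketch that prints them with spaces instead; the function name show_cmdline is hypothetical.

```shell
# Hypothetical helper: print a NUL-separated cmdline file with spaces
# between arguments so it reads like the original command line.
show_cmdline() {
    tr '\0' ' ' < "$1"
    echo
}

# Example: the command line of the current shell (Linux only).
if [ -r "/proc/$$/cmdline" ]; then
    show_cmdline "/proc/$$/cmdline"
fi
```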

cat /proc/<process_id>/fd/2

xargs passes the output (stdout) of the first command to the second command as arguments.

ls | xargs rm -f

This removes all files listed by the ls command.

-p flag: prompts for confirmation before each command runs, so it can serve as a dry run.

[root@lp-k8control-1 xargs]# ls

test test2 test3

[root@lp-k8control-1 xargs]# ls | xargs -p rm -f

rm -f test test2 test3 ?...

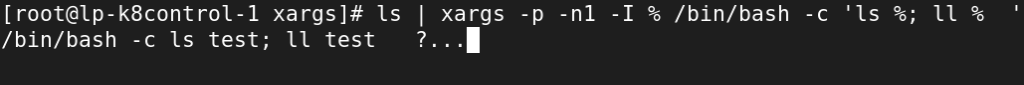

-n1 flag: pass one argument per command invocation

[root@lp-k8control-1 xargs]# ls | xargs -p -n1 rm -f

rm -f test ?...

-I % flag: replace % with each input item, which allows running multiple commands per item

[root@lp-k8control-1 xargs]# ls | xargs -p -n1 -I % /bin/bash -c 'ls %; ll % '

/bin/bash -c ls test; ll test ?...y

test
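Piping ls into xargs splits on whitespace, so a file named "test 2" would be passed as two arguments. A minimal sketch of a safer variant, assuming find and xargs support -print0 and -0 (GNU and BSD versions do); the function name preview_rm is hypothetical, and the demo works in a throwaway directory.

```shell
# Hypothetical helper: print the rm command that would run for each
# regular file in a directory, one file per line, without deleting.
preview_rm() {
    find "$1" -maxdepth 1 -type f -print0 | xargs -0 -n1 echo rm -f
}

# Demo in a temporary directory so nothing real is touched.
workdir=$(mktemp -d)
touch "$workdir/test" "$workdir/test 2" "$workdir/test3"
preview_rm "$workdir"

# Remove the files for real, then the now-empty directory.
find "$workdir" -maxdepth 1 -type f -print0 | xargs -0 rm -f
rmdir "$workdir"
```

Putting echo in front of the command is a non-interactive alternative to -p when the goal is only to see what would run.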

Why?

– Run process in background

– Run database backup in background

Tmux:

#List

tmux ls

#Start session

tmux new -s mysession

#Reconnect

tmux a -t session_name

#Detach (disconnect)

Ctrl+b, then d

#Reconnect to 0 session

tmux a -t 0

More : https://tmuxcheatsheet.com

Screen :

#List

screen -ls

#Start a detached named session running a command

screen -A -m -d -S session_name command

#Reconnect to named session

screen -r session_name

#Detach (disconnect)

Ctrl+a, then d

More : https://gist.github.com/jctosta/af918e1618682638aa82

Nohup:

nohup command &

It creates a nohup.out file in the current directory containing the command's output.
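When several jobs run from the same directory, a shared nohup.out gets hard to read. A minimal sketch that sends each job's output to its own log file and records the PID; the function name run_detached and the variable detached_pid are hypothetical.

```shell
# Hypothetical helper: run a command under nohup, send stdout and stderr
# to the given log file, and store the background PID in detached_pid.
run_detached() {
    log="$1"
    shift
    nohup "$@" > "$log" 2>&1 &
    detached_pid=$!
}

logfile=$(mktemp)
run_detached "$logfile" sh -c 'echo backup started'
wait "$detached_pid"    # only for this demo; normally you would just log out
cat "$logfile"
rm -f "$logfile"
```

Because stdout is redirected explicitly, nohup does not create nohup.out in this case.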

Jobs:

jobs

fg

bg

strings /lib64/libc.so.6 | grep GLIBC

strings /bin/ls

Usage: strings [option(s)] [file(s)]
 Display printable strings in [file(s)] (stdin by default)
 The options are:
  -a - --all                     Scan the entire file, not just the data section [default]
  -d --data                      Only scan the data sections in the file
  -f --print-file-name           Print the name of the file before each string
  -n --bytes=[number]            Locate & print any NUL-terminated sequence of at
  -<number>                        least [number] characters (default 4).
  -t --radix={o,d,x}             Print the location of the string in base 8, 10 or 16
  -w --include-all-whitespace    Include all whitespace as valid string characters
  -o                             An alias for --radix=o
  -T --target=<BFDNAME>          Specify the binary file format
  -e --encoding={s,S,b,l,B,L}    Select character size and endianness:
                                 s = 7-bit, S = 8-bit, {b,l} = 16-bit, {B,L} = 32-bit
  -s --output-separator=<string> String used to separate strings in output.
  @<file>                        Read options from <file>
  -h --help                      Display this information
  -v -V --version                Print the program's version number

strings: supported targets: elf64-x86-64 elf32-i386 elf32-iamcu elf32-x86-64 a.out-i386-linux pei-i386 pei-x86-64 elf64-l1om elf64-k1om elf64-little elf64-big elf32-little elf32-big pe-x86-64 pe-bigobj-x86-64 pe-i386 plugin srec symbolsrec verilog tekhex binary ihex

dd bs=4M if=/home/input.iso of=/dev/sd[?] conv=fdatasync status=progress

[?] = your USB device letter; run lsblk to find it
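dd overwrites whatever device it is pointed at, so a quick check of the target before writing can avoid wiping the wrong disk. A minimal sketch, assuming Linux sysfs; the function name is_removable is hypothetical, "sdX" is a placeholder, and some USB disk enclosures report themselves as non-removable, so treat a refusal as a prompt to double-check with lsblk rather than a final verdict.

```shell
# Hypothetical helper: succeed only if the named disk (e.g. "sdb") is
# flagged removable in sysfs. The second argument overrides the sysfs
# root and exists only to make the check testable.
is_removable() {
    flag="${2:-/sys}/block/$1/removable"
    [ -r "$flag" ] && [ "$(cat "$flag")" = "1" ]
}

target="sdX"   # placeholder: replace with the device name shown by lsblk
if is_removable "$target"; then
    echo "/dev/$target is removable; OK to use as the dd target"
else
    echo "/dev/$target is not flagged removable; refusing to continue"
fi
```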